The AI era demands a new kind of infrastructure

The computational intensity of artificial-intelligence workloads is forcing a wholesale rethink of data-center design. Where a standard rack once drew 5–10 kW, today’s high-density AI racks draw 30–300 kW, and hyperscale operators are preparing for 500 kW to 1 MW per rack. This surge isn’t just about bigger budgets; it’s about reinventing how we power and cool the hardware that runs AI.

What’s driving the surge in rack power

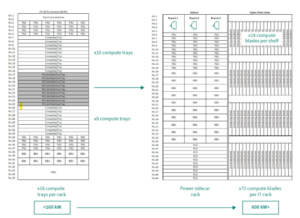

Massive GPU clusters – One major factor behind rising power density is the sheer number of graphics processors needed to train and run AI models. Large language models and generative AI rely on thousands of GPUs working in parallel. A single AI server may contain 8–16 GPUs, and hyperscalers pack as many of these servers into each rack as power and cooling allow. For example, Infineon’s Powering the Future of AI paper notes that clustering thousands of GPUs in a single rack can push its power draw beyond 1 MW, which is driving a shift from tray servers to blade-server architectures.

Figure: Disaggregated AI rack with side-car power shelves

New power architectures – Another key change is how power is delivered. Modern GPUs draw hundreds of watts each and demand specialised power supplies. Traditional 48 V power shelves serve up to 100 kW per rack; to exceed 500 kW, however, operators are moving to three-phase AC feeds rectified onto high-voltage direct-current (HVDC) busbars, combined with disaggregated rack designs. In these new designs, power supplies and batteries sit in side-car enclosures beside the compute rack. Separating the power equipment frees space for compute trays, and the higher bus voltage lowers the current and with it the resistive losses.
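
The case for higher bus voltage follows from I = P / V. The sketch below is a rough illustration, not a design calculation: the 48 V figure comes from the text above, while the 800 V bus is an assumed example value and the function names are hypothetical. It ignores conversion efficiency and power factor.

```python
def bus_current_amps(rack_power_kw: float, bus_voltage_v: float) -> float:
    """Current the DC bus must carry for a given rack power: I = P / V."""
    return rack_power_kw * 1000.0 / bus_voltage_v


def relative_conduction_loss(low_voltage_v: float, high_voltage_v: float) -> float:
    """Ratio of I^2 * R loss at the higher voltage to the loss at the lower
    voltage, assuming the same delivered power and the same conductor resistance."""
    return (low_voltage_v / high_voltage_v) ** 2


if __name__ == "__main__":
    for power_kw in (100, 500, 1000):
        for volts in (48, 800):  # 800 V is an illustrative assumption
            amps = bus_current_amps(power_kw, volts)
            print(f"{power_kw:>5} kW at {volts:>3} V -> {amps:,.0f} A")
    ratio = relative_conduction_loss(48, 800)
    print(f"Conduction loss at 800 V relative to 48 V: {ratio:.4f}")
```

Under these assumptions, 100 kW at 48 V already means roughly 2,083 A on the busbar, and 500 kW would need more than 10,000 A. The same 500 kW at the assumed 800 V needs 625 A.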

Why traditional cooling isn’t enough

Because high-density racks produce enormous heat in a confined space, room-level air conditioning is quickly overwhelmed. Room-level systems top out around 20 kW per rack, and even enhanced air systems such as in-row or rear-door units plateau near 50 kW. As AI workloads climb toward 300 kW and beyond, direct-to-chip liquid cooling and immersion cooling become essential. Air alone can’t carry heat away from densely packed components fast enough, and moving huge volumes of air consumes a lot of energy.
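
To see why air runs out of headroom, compare the flow needed to carry the same heat with air and with water, using Q = P / (ρ · c_p · ΔT). This is a back-of-the-envelope sketch: the fluid properties are textbook approximations, the 10 K temperature rise is an assumed value applied to both fluids, and real liquid loops often use a water-glycol mix rather than pure water.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)


def volumetric_flow_m3_per_s(heat_kw: float, density: float,
                             specific_heat: float, delta_t_k: float) -> float:
    """Volumetric flow needed to absorb heat_kw with a delta_t_k temperature rise."""
    return heat_kw * 1000.0 / (density * specific_heat * delta_t_k)


if __name__ == "__main__":
    heat_kw = 100.0    # example rack load
    delta_t_k = 10.0   # assumed coolant temperature rise
    air = volumetric_flow_m3_per_s(heat_kw, AIR_DENSITY, AIR_CP, delta_t_k)
    water = volumetric_flow_m3_per_s(heat_kw, WATER_DENSITY, WATER_CP, delta_t_k)
    print(f"Air:   {air:.2f} m^3/s")
    print(f"Water: {water * 1000:.2f} L/s")
    print(f"Air needs about {air / water:,.0f} times the volume flow of water")
```

With these assumptions, a 100 kW rack needs a little over 8 m³ of air per second but only about 2.4 litres of water per second.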

From sealed enclosures to immersion: modern cooling solutions

- Sealed enclosures with enhanced air cooling – A sealed rack with in‑row or rear‑door heat exchangers directs chilled air precisely where it’s needed. These systems work well for racks up to roughly 50 kW.

- Direct-to-chip liquid cooling – Coolant circulates between cold plates mounted directly on CPUs or GPUs and a coolant distribution unit (CDU). Industry research shows this approach supports 100–300 kW per rack and is becoming the default for AI hardware. Because liquid removes heat much more efficiently than air, it allows denser server configurations.

- Immersion cooling – Entire servers are submerged in a dielectric fluid that absorbs heat evenly. This method can support rack densities of several hundred kilowatts and is considered a bridge to the 1 MW rack era. Immersion systems eliminate most server fans and can recycle waste heat, improving energy efficiency.
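
The density ranges above can be turned into a simple first-pass lookup. This is a sketch only: the thresholds are the approximate figures quoted in this article, the function name is hypothetical, and two boundaries are assumptions. The 50–100 kW gap is assigned to direct-to-chip cooling, and everything above 300 kW is assigned to immersion, although the two liquid methods overlap in practice.

```python
def suggest_cooling(rack_kw: float) -> str:
    """Map a rack's power density to the cooling tier described in the article."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if rack_kw <= 20:
        return "room-level air cooling"
    if rack_kw <= 50:
        return "sealed enclosure with in-row or rear-door cooling"
    if rack_kw <= 300:
        # Assumption: the 50-100 kW gap is treated as direct-to-chip territory.
        return "direct-to-chip liquid cooling"
    # Assumption: beyond 300 kW the sketch defaults to immersion.
    return "immersion cooling"


if __name__ == "__main__":
    for kw in (8, 35, 120, 450):
        print(f"{kw:>4} kW -> {suggest_cooling(kw)}")
```

A real selection would also weigh floor loading, facility water availability and serviceability, which this lookup does not capture.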

Rethinking power distribution and reliability

As cooling evolves, so must power delivery and the reliability of the systems around it. Hyperscale operators deploy redundant CDUs with independent pumps and heat exchangers, achieving up to “five nines” (99.999%) availability. The latest power pods deliver 190 kW to 700 kW today and will soon reach 800 kW–1 MW. Moving from 12 kW supplies to high-voltage shelves reduces current draw and wiring complexity, while relocating batteries and rectifiers to side cars improves rack density and maintenance access.
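
To put “five nines” in perspective, the sketch below converts an availability figure into yearly downtime and shows how redundancy raises availability. It assumes unit failures are independent and that any single working unit can carry the full load. The 99.7% per-unit figure is a hypothetical example, not a vendor specification.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability: float) -> float:
    """Expected downtime per year for a given availability (0..1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(unit_availability: float, units: int) -> float:
    """Availability of N redundant units when one working unit is enough,
    assuming independent failures."""
    return 1.0 - (1.0 - unit_availability) ** units


if __name__ == "__main__":
    print(f"Five nines: {downtime_minutes_per_year(0.99999):.2f} min/year")
    single = 0.997  # hypothetical availability of one CDU
    for n in (1, 2, 3):
        combined = parallel_availability(single, n)
        minutes = downtime_minutes_per_year(combined)
        print(f"{n} unit(s): {combined:.6f} availability, {minutes:.1f} min/year")
```

Five nines corresponds to a little over five minutes of downtime per year, which under these assumptions two independent 99.7% units would reach.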

Figure: High-voltage DC power distribution for AI racks

Your roadmap to AI‑ready racks

Transitioning to high‑density infrastructure requires a staged approach:

- Audit your racks and workloads – Identify heat‑intensive servers and measure real‑time power draw per cabinet. This establishes your baseline for planning.

- Assess cooling headroom – Determine whether existing CRAC/CRAH units can be upgraded with in‑row or rear‑door modules. If not, plan for liquid‑cooling retrofits.

- Design liquid‑cooling loops – Budget for CDUs, cold plates, pipes and leak‑detection systems. Engage vendors with proven deployments and service capabilities.

- Upgrade power distribution – Evaluate high-voltage busbars, side-car power enclosures and redundant power shelves. Plan for three-phase AC feeds and high-voltage DC distribution to support future growth.

- Engage experts early – Partner with engineers experienced in AI and HPC workloads to design enclosures that balance performance, efficiency and reliability.
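
The first two steps can be sketched as a small audit script. It assumes you already have per-cabinet power samples in kW, for example exported from metered PDUs. The cabinet names and readings are made up, the `audit` function is hypothetical, and the 20 kW and 50 kW limits are the approximate air-cooling ceilings quoted earlier in this article.

```python
from statistics import mean

ROOM_AIR_LIMIT_KW = 20.0      # approximate ceiling for room-level air
ENHANCED_AIR_LIMIT_KW = 50.0  # approximate ceiling for in-row / rear-door units


def audit(readings_kw: dict[str, list[float]]) -> list[dict]:
    """Summarise each cabinet's draw and suggest a cooling plan from its peak."""
    report = []
    for cabinet, samples in readings_kw.items():
        if not samples:
            continue  # skip cabinets with no measurements
        peak = max(samples)
        if peak <= ROOM_AIR_LIMIT_KW:
            plan = "room-level air is sufficient"
        elif peak <= ENHANCED_AIR_LIMIT_KW:
            plan = "add in-row or rear-door cooling"
        else:
            plan = "plan a liquid-cooling retrofit"
        report.append({"cabinet": cabinet, "mean_kw": mean(samples),
                       "peak_kw": peak, "plan": plan})
    # Hottest cabinets first, so they get attention first.
    return sorted(report, key=lambda row: row["peak_kw"], reverse=True)


if __name__ == "__main__":
    sample_readings = {  # made-up example data
        "A1": [8.0, 9.5, 9.1],
        "B2": [42.0, 47.5, 44.0],
        "C3": [95.0, 120.0, 110.0],
    }
    for row in audit(sample_readings):
        print(f"{row['cabinet']}: mean {row['mean_kw']:.1f} kW, "
              f"peak {row['peak_kw']:.1f} kW -> {row['plan']}")
```

Sizing against the peak rather than the mean is a deliberately conservative choice, since cooling has to cope with the worst sustained load.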

Following these steps will help your data center scale with tomorrow’s AI demands while maintaining safety and efficiency. Organisations that adopt modern enclosures, liquid cooling and high‑voltage power distribution today will be best positioned to benefit from the next decade of AI computing. If you need assistance evaluating, designing or implementing these solutions, USDC can provide guidance based on proven deployments.

📚 Sources

- 📄 Ramboll (2024). 100+ kW per rack in data centers: The evolution and revolution of power density. Retrieved from: https://www.ramboll.com

- 📄 Data Center Dynamics (2025). Hyperscalers prepare for 1 MW racks at OCP EMEA; Google announces new CDU. Retrieved from: https://www.datacenterdynamics.com

- 📄 Infineon Technologies (2024). Powering the Future of AI (White paper). Retrieved from: https://www.infineon.com