Why data centers must be rebuilt—thermally and electrically—to serve AI infrastructure

From IT Support Systems to AI-Enabling Infrastructure

AI data centers are not conventional facilities running heavier workloads. They are purpose-built environments where cooling and power infrastructure define how much AI compute can exist at all.

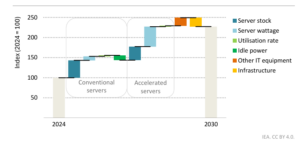

As AI models scale, rack power density has increased dramatically. Traditional enterprise data centers were designed around racks drawing roughly 5–10 kW, with relatively even power distribution and air-based cooling. Modern AI clusters, however, routinely operate at 30–50 kW per rack, while advanced GPU and accelerator platforms are already reaching 100–120 kW per rack, with higher densities on the horizon.

Industry projections suggest that average global rack density will rise from approximately 12 kW today to nearly 30 kW by 2027, while hyperscale operators are actively planning for 100+ kW racks. This shift fundamentally changes how data centers must be designed.

- Traditional data centers: ~5–10 kW per rack, distributed loads, air cooling

- AI-era data centers: 30–100+ kW per rack, highly concentrated loads, specialized cooling and power

- Looking ahead: Racks approaching 200 kW will force a full redesign of thermal, electrical, and physical infrastructure
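To make the density shift concrete, the following minimal sketch counts how many fully loaded racks are needed to host the same IT load at each density tier. The 1 MW block size and the single representative value chosen for each tier are illustrative assumptions, not figures from the cited sources.

```python
import math

# Representative per-rack densities in kW, picked from the ranges quoted above.
# The specific value chosen for each tier is an assumption for illustration.
RACK_DENSITIES_KW = {
    "traditional enterprise": 10,
    "modern AI cluster": 50,
    "advanced GPU platform": 120,
    "projected next generation": 200,
}


def racks_for_it_load(it_load_kw: float, rack_kw: float) -> int:
    """Return the number of fully loaded racks needed to host an IT load."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    return math.ceil(it_load_kw / rack_kw)


def main() -> None:
    it_load_kw = 1_000  # hypothetical 1 MW IT block
    for label, rack_kw in RACK_DENSITIES_KW.items():
        count = racks_for_it_load(it_load_kw, rack_kw)
        print(f"{label:>26}: {count:>4} racks at {rack_kw} kW each")


if __name__ == "__main__":
    main()
```

The same megawatt that once spread across a hundred racks now lands in a handful, which is why the heat and current per rack position, rather than floor space, become the binding constraints.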

In this context, cooling and power are no longer background engineering systems. They become primary enablers—and hard limits—of AI infrastructure scale.

Cooling for AI: When Air Is No Longer Enough

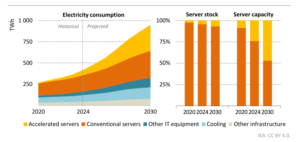

Figure: Share of data center electricity by component (IT, cooling, UPS, networking) and projected global data center electricity use (Source: IEA, 2025).

The heat generated by high-density AI servers quickly overwhelms legacy cooling designs. In conventional data centers, cooling systems typically consume 7–30% of total facility power, depending on efficiency and site design. As rack densities rise beyond approximately 50 kW, traditional air-based approaches, such as computer room air conditioner and air handler (CRAC/CRAH) units, raised floors, and airflow optimization, are no longer sufficient to maintain safe operating temperatures.
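One way to see why air runs out of headroom is the sensible-heat relation, flow = heat / (density × specific heat × temperature rise). The sketch below applies it to air and, for comparison, to water. The fluid properties are textbook approximations, and the 12 K air-side and 10 K water-side temperature rises are assumptions chosen only for illustration.

```python
# Approximate fluid properties (assumed, near room temperature).
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
WATER_DENSITY_KG_M3 = 998.0
WATER_CP_J_PER_KG_K = 4186.0

M3S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def volumetric_flow_m3_per_s(heat_kw: float, delta_t_k: float,
                             density_kg_m3: float, cp_j_per_kg_k: float) -> float:
    """Volumetric flow needed to carry away heat_kw with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_kw * 1000.0 / (cp_j_per_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_m3


def airflow_m3_per_s(heat_kw: float, delta_t_k: float) -> float:
    """Airflow needed to remove heat_kw from a rack."""
    return volumetric_flow_m3_per_s(heat_kw, delta_t_k,
                                    AIR_DENSITY_KG_M3, AIR_CP_J_PER_KG_K)


def water_flow_l_per_s(heat_kw: float, delta_t_k: float) -> float:
    """Water flow in litres per second needed to remove heat_kw."""
    return 1000.0 * volumetric_flow_m3_per_s(heat_kw, delta_t_k,
                                             WATER_DENSITY_KG_M3, WATER_CP_J_PER_KG_K)


if __name__ == "__main__":
    for rack_kw in (10, 50, 100):
        air = airflow_m3_per_s(rack_kw, delta_t_k=12.0)
        water = water_flow_l_per_s(rack_kw, delta_t_k=10.0)
        print(f"{rack_kw:>3} kW rack: {air:5.2f} m^3/s of air "
              f"({air * M3S_TO_CFM:,.0f} CFM) or {water:4.2f} L/s of water")
```

Under these assumptions a 50 kW rack needs roughly 3.5 m³/s of air through a single cabinet, while the same heat can be carried by a little over one litre of water per second.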

To remove tens or even hundreds of kilowatts of heat from individual racks, AI-focused data centers are increasingly adopting liquid-based cooling architectures, including:

- Rear-door heat exchangers (RDHX): Liquid-cooled doors mounted on racks, suitable for approximately 40–60 kW per rack

- Direct-to-chip (cold-plate) cooling: Coolant delivered directly to CPUs and GPUs, supporting 60–120 kW per rack

- Immersion cooling: Servers submerged in non-conductive fluids; single-phase systems typically handle 100+ kW per rack, while two-phase systems extend beyond 150 kW
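The density bands in the list above can be captured as a small lookup. This is a sketch only: it treats the quoted ranges as hard bands with inclusive edges, which is a simplification, and the names `CoolingBand` and `candidate_cooling` are hypothetical helpers introduced for illustration.

```python
from typing import List, NamedTuple, Optional


class CoolingBand(NamedTuple):
    name: str
    min_kw: float
    max_kw: Optional[float]  # None means no upper bound is quoted in the text


# Bands transcribed from the list above; treating them as strict limits
# is an assumption made for this example.
COOLING_BANDS = [
    CoolingBand("rear-door heat exchanger", 40, 60),
    CoolingBand("direct-to-chip cold plate", 60, 120),
    CoolingBand("single-phase immersion", 100, None),
    CoolingBand("two-phase immersion", 150, None),
]


def candidate_cooling(rack_kw: float) -> List[str]:
    """Return the liquid-cooling options whose quoted band covers rack_kw."""
    return [
        band.name
        for band in COOLING_BANDS
        if band.min_kw <= rack_kw and (band.max_kw is None or rack_kw <= band.max_kw)
    ]


if __name__ == "__main__":
    for rack_kw in (20, 50, 110, 160):
        options = candidate_cooling(rack_kw) or ["below the liquid-cooling bands listed"]
        print(f"{rack_kw:>3} kW: {', '.join(options)}")
```

The bands overlap, so a real selection would also weigh retrofit cost, serviceability, and facility water availability, none of which this lookup models.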

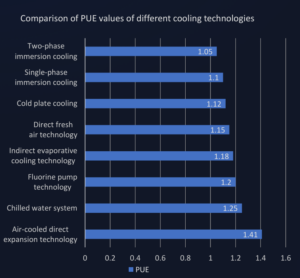

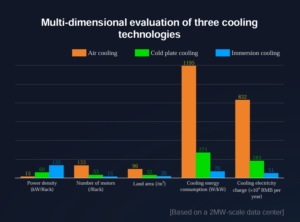

Liquid cooling does more than enable higher power density—it materially improves efficiency. Facilities using liquid-based approaches often achieve PUE reductions of around 10% compared to air-only designs. In some studies, immersion cooling reduced cooling energy consumption by 90–94% relative to conventional air systems.

Figure: PUE comparison across cooling technologies (Source: United for Efficiency, 2025)

Figure: Multi-dimensional energy and performance comparison showing immersion cooling energy savings (Source: United for Efficiency, 2025)

As AI workloads continue to push thermal limits, air-only cooling becomes impractical, and liquid cooling transitions from a niche option to a foundational requirement.
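The efficiency argument in this section can be sanity-checked with simple PUE arithmetic. In the sketch below, the load breakdown (1,000 kW IT, 400 kW cooling, 100 kW other overhead) and the 10 MW facility budget are hypothetical numbers chosen for illustration; only the roughly 10% PUE reduction comes from the text.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


def it_capacity_kw(facility_budget_kw: float, pue_value: float) -> float:
    """IT load that fits inside a fixed facility power budget at a given PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return facility_budget_kw / pue_value


if __name__ == "__main__":
    baseline = pue(it_kw=1_000, cooling_kw=400, other_overhead_kw=100)
    improved = baseline * 0.90  # the ~10% PUE reduction quoted for liquid cooling
    budget_kw = 10_000          # hypothetical fixed grid connection

    before = it_capacity_kw(budget_kw, baseline)
    after = it_capacity_kw(budget_kw, improved)
    print(f"baseline PUE {baseline:.2f} -> {before:,.0f} kW of IT load")
    print(f"improved PUE {improved:.2f} -> {after:,.0f} kW of IT load")
    print(f"additional IT capacity: {after - before:,.0f} kW")
```

When the grid connection is the fixed quantity, a lower PUE translates directly into more power available for compute.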

Rethinking Power Infrastructure for AI Loads

High-density AI racks also impose extreme demands on electrical distribution systems. Power architectures designed for 10 kW racks, with small power distribution units (PDUs) and lightweight busbars, cannot safely or efficiently support 50–100+ kW loads.

To meet these requirements, AI data centers increasingly adopt megawatt-scale power architectures, where racks are grouped into dedicated power pods with their own feeders, switchgear, and protection systems. Three-phase power distribution is standard, as it significantly reduces conductor size and losses compared to single-phase configurations.
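The conductor-size benefit of three-phase distribution follows from the standard current formulas. The sketch below compares line current for the same rack load under both schemes. The 415 V line-to-line voltage and the 0.95 power factor are assumptions chosen for illustration, not values from the sources.

```python
import math


def three_phase_line_current_a(power_kw: float, line_to_line_v: float,
                               power_factor: float = 0.95) -> float:
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V_LL * pf)."""
    return power_kw * 1000.0 / (math.sqrt(3) * line_to_line_v * power_factor)


def single_phase_current_a(power_kw: float, voltage_v: float,
                           power_factor: float = 0.95) -> float:
    """Current for a single-phase load: I = P / (V * pf)."""
    return power_kw * 1000.0 / (voltage_v * power_factor)


if __name__ == "__main__":
    v_ll = 415.0                  # assumed line-to-line voltage
    v_ln = v_ll / math.sqrt(3)    # matching line-to-neutral voltage (~240 V)
    for rack_kw in (10, 50, 100):
        i3 = three_phase_line_current_a(rack_kw, v_ll)
        i1 = single_phase_current_a(rack_kw, v_ln)
        print(f"{rack_kw:>3} kW: {i3:6.1f} A per line (three-phase) "
              f"vs {i1:6.1f} A (single-phase at {v_ln:.0f} V)")
```

At the same line-to-neutral voltage, each three-phase conductor carries one third of the single-phase current, which is what allows smaller conductors and lower resistive losses.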

At the rack level, infrastructure must support unprecedented current levels:

- High-capacity PDUs and busway tap-offs delivering 60–100 kW per rack

- Advanced fault protection and current-limiting circuit breakers to manage extreme fault energy

- Prefabricated, high-density (“HD”) power modules to accelerate deployment and ensure reliability

Some hyperscale operators are also moving toward 48 V rack-level power distribution, reducing conversion losses by as much as 25% compared to traditional 12–24 V designs.
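Part of the appeal of a higher bus voltage is resistive: for the same delivered power, current falls in proportion to voltage and I²R loss falls with its square. The sketch below illustrates only that effect. The 10 kW shelf load and the 0.5 mΩ path resistance are hypothetical, and the example does not model converter efficiency, so it does not reproduce the 25% figure quoted above.

```python
def bus_current_a(power_w: float, bus_voltage_v: float) -> float:
    """Current drawn from a DC bus delivering power_w at bus_voltage_v."""
    if bus_voltage_v <= 0:
        raise ValueError("bus_voltage_v must be positive")
    return power_w / bus_voltage_v


def resistive_loss_w(power_w: float, bus_voltage_v: float,
                     path_resistance_ohm: float) -> float:
    """I^2 * R loss in the distribution path for a given delivered power."""
    current = bus_current_a(power_w, bus_voltage_v)
    return current * current * path_resistance_ohm


if __name__ == "__main__":
    power_w = 10_000.0           # hypothetical load on one power shelf
    path_resistance_ohm = 0.0005  # hypothetical busbar and connector resistance
    for volts in (12.0, 24.0, 48.0):
        amps = bus_current_a(power_w, volts)
        loss = resistive_loss_w(power_w, volts, path_resistance_ohm)
        print(f"{volts:>4.0f} V bus: {amps:6.1f} A, {loss:6.1f} W lost in the path")
```

Moving from 12 V to 48 V cuts current by a factor of 4 and path loss by a factor of 16 for the same conductor.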

In AI-focused facilities, every layer of the electrical chain—utility interconnects, transformers, UPS systems, switchgear, and rack PDUs—must be designed around sustained, high-current operation. Power is no longer a generic utility; it is a core architectural constraint.

Advanced Infrastructure Technologies for AI-Ready Facilities

Designing data centers to serve AI infrastructure requires a tightly integrated set of technologies across cooling, power, and physical layout.

On the cooling side, operators deploy:

- In-row and in-rack cooling modules

- Hot-aisle containment and optimized airflow paths

- CFD-driven thermal modeling to manage localized heat concentration

- Facilities without raised floors, using overhead liquid distribution and containment strategies

On the power side, modularity and scalability are critical. Prefabricated power skids that combine switchgear, UPS, and distribution enable rapid multi-megawatt expansion. Higher-voltage distribution (such as 480 VAC or medium-voltage systems) lowers the current needed for a given load and so reduces distribution losses within large data halls.

To address grid constraints and sustainability goals, some AI-focused facilities are integrating on-site energy storage, fuel cells, or microgrids to support peak loads and improve resilience.

Monitoring and control systems tie these elements together. Dense sensor networks track temperature, coolant flow, humidity, and power at rack level, while modern data center infrastructure management (DCIM) platforms dynamically adjust cooling and electrical parameters to maintain stability. Importantly, these systems exist to support AI infrastructure, not to “apply AI” for its own sake.
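As a rough illustration of rack-level monitoring, the sketch below checks one telemetry sample against operating limits. It does not represent any particular DCIM product: the `RackReading` and `RackLimits` types, the `check_rack` function, and every threshold value are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RackReading:
    rack_id: str
    inlet_temp_c: float
    coolant_flow_lpm: float
    power_kw: float


@dataclass
class RackLimits:
    # All limits are placeholder values; real ones come from equipment specs.
    max_inlet_temp_c: float = 32.0
    min_coolant_flow_lpm: float = 60.0
    rated_power_kw: float = 100.0


def check_rack(reading: RackReading, limits: RackLimits) -> List[str]:
    """Return human-readable alerts for any limit the reading violates."""
    alerts = []
    if reading.inlet_temp_c > limits.max_inlet_temp_c:
        alerts.append(f"{reading.rack_id}: inlet temperature {reading.inlet_temp_c} C too high")
    if reading.coolant_flow_lpm < limits.min_coolant_flow_lpm:
        alerts.append(f"{reading.rack_id}: coolant flow {reading.coolant_flow_lpm} L/min too low")
    if reading.power_kw > limits.rated_power_kw:
        alerts.append(f"{reading.rack_id}: power draw {reading.power_kw} kW above rating")
    return alerts


if __name__ == "__main__":
    limits = RackLimits()
    samples = [
        RackReading("rack-a01", inlet_temp_c=25.0, coolant_flow_lpm=80.0, power_kw=92.0),
        RackReading("rack-a02", inlet_temp_c=34.5, coolant_flow_lpm=45.0, power_kw=104.0),
    ]
    for sample in samples:
        for alert in check_rack(sample, limits) or [f"{sample.rack_id}: within limits"]:
            print(alert)
```

A production system would add hysteresis, rate-of-change checks, and automated responses such as adjusting pump speed, which are outside the scope of this sketch.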

Best Practices for Designing Data Centers to Serve AI

To successfully deploy and scale AI infrastructure, operators should adopt AI-first design principles:

- Plan by megawatts, not rack counts. Model total IT load in MW and ensure grid connections, transformers, and UPS capacity can support peak AI demand.

- Isolate high-density AI zones. Group GPU-heavy racks into dedicated halls or pods to optimize cooling and power delivery.

- Build modularly. Deploy power and cooling in scalable blocks (e.g., 1–5 MW increments) using prefabricated systems to accelerate growth.

- Prioritize efficiency and heat reuse. Every kilowatt saved in cooling directly enables more AI compute. Waste heat recovery can further improve economics.

- Design for resilience. Implement N+1 or 2N redundancy in power and cooling, with fast fault detection to protect high-value AI hardware.

- Prepare for future densities. Select low-GWP coolants, design with voltage headroom, and anticipate next-generation accelerator platforms.

- Engage early with utilities and regulators. AI-scale power demand requires coordination well before deployment begins.
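The "plan by megawatts", "build modularly", and "design for resilience" principles above combine into a simple sizing calculation. The sketch below counts how many prefabricated blocks a target load needs under each redundancy scheme. The 18 MW load and 5 MW block size are hypothetical inputs, and `blocks_required` is an illustrative helper rather than an industry-standard method.

```python
import math


def blocks_required(load_mw: float, block_mw: float, redundancy: str = "N+1") -> int:
    """Number of modular power or cooling blocks for a load and redundancy scheme.

    redundancy is one of "N" (no spare), "N+1" (one spare block),
    or "2N" (fully duplicated capacity).
    """
    if load_mw <= 0 or block_mw <= 0:
        raise ValueError("load_mw and block_mw must be positive")
    n = math.ceil(load_mw / block_mw)
    if redundancy == "N":
        return n
    if redundancy == "N+1":
        return n + 1
    if redundancy == "2N":
        return 2 * n
    raise ValueError(f"unknown redundancy scheme: {redundancy}")


if __name__ == "__main__":
    load_mw = 18.0   # hypothetical peak load to be served
    block_mw = 5.0   # hypothetical prefabricated block size
    for scheme in ("N", "N+1", "2N"):
        count = blocks_required(load_mw, block_mw, scheme)
        print(f"{scheme:>3}: {count} blocks, {count * block_mw:.0f} MW installed")
```

Running the numbers early shows how much installed capacity the chosen redundancy level adds, which feeds directly into the utility and transformer conversations.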

Conclusion: Data Centers Rebuilt for AI

AI data centers are increasingly indistinguishable from power and thermal plants with servers on top. Electric power and heat removal—not compute hardware—now define the upper bounds of AI scale.

Facilities designed around megawatt-scale power distribution and liquid-based cooling from day one will be able to support the next generation of AI workloads efficiently and reliably. Those that rely on legacy architectures will encounter hard physical limits far sooner.

The defining shift is not AI in data center operations—it is data centers redesigned to serve AI infrastructure. Power, cooling, and physical design are no longer supporting systems. They are the foundation of the AI era.

📚 Sources

- 📄 ABB (2025). Powering the AI Revolution: Enhanced Protection for High-Density Data Center Infrastructure. Retrieved from: https://new.abb.com/news/detail/131736

- 📄 McKinsey (2024). AI power: Expanding data center capacity to meet growing demand.

- 📄 International Energy Agency – IEA (2025). Energy demand from AI. Retrieved from: https://www.iea.org/reports/energy-and-ai/energy-demand-from-ai

- 📄 Ramboll (2024). 100+ kW per rack in data centers: The evolution and revolution of power density. Retrieved from: https://www.ramboll.com/insights/decarbonise-for-net-zero/100-kw-per-rack-data-centers-evolution-power-density

- 📄 Vertiv (2024). The AI data center of the future (industry analysis). Retrieved from: https://www.vertiv.com/en-emea/about/news-and-insights/articles/ai-data-centers-of-the-future/

- 📄 United for Efficiency (2025). Cooling the AI revolution – performance of cooling technologies. Retrieved from: https://united4efficiency.org/resources/cooling-the-ai-revolution/