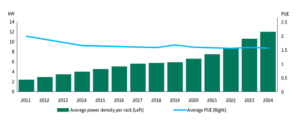

AI workloads are driving a step-change in IT load: rack power has risen from the traditional ~5–10 kW to 30–100+ kW in AI clusters. (See Figure 1.)

Data centers must now be planned and measured in MW of IT load rather than rack counts.

The shift impacts power distribution, cooling design, site selection and utility relationships.

(This is Part 1 of the series “AI Data Centers: Power, Cooling, Design and the New Economics”)

AI is changing the core role of data centers

Average rack power density has risen sharply over the past decade and is expected to continue increasing as AI workloads drive demand for higher compute density.

Source: Macquarie Asset Management (2025)

In traditional data centers built for predictable IT workloads (ERP, databases, enterprise apps, web services), IT load was treated as a technical detail rather than a strategic factor. Racks were relatively low-power, typically 5–10 kW each, and power was allocated fairly evenly across the floor. Air cooling sufficed, and capacity was expanded in line with linear growth forecasts. The recent surge in AI, especially generative AI and deep learning, has made this model obsolete.

- Traditional data centers: ~5–10 kW per rack, uniform power distribution, air cooling suffices, expand incrementally.

- AI-driven data centers: much higher, uneven loads concentrated in GPU/accelerator racks (~30–100+ kW per rack).
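To make "uneven" concrete, the short sketch below totals the IT load of a single hall that mixes legacy and GPU racks and reports how much of the power the GPU racks draw. The rack counts and per-rack loads (150 racks at 8 kW, 50 racks at 80 kW) are hypothetical values chosen from the ranges in this article, not data from any real facility.

```python
# Hypothetical hall mix: counts and per-rack loads are illustrative assumptions only.
racks = {
    "legacy": {"count": 150, "kw_per_rack": 8},
    "ai_gpu": {"count": 50, "kw_per_rack": 80},
}


def hall_load_kw(mix):
    """Total IT load of the hall in kW (sum of count * per-rack load)."""
    return sum(r["count"] * r["kw_per_rack"] for r in mix.values())


def power_share(mix, name):
    """Fraction of the hall's total IT load drawn by one rack group."""
    group = mix[name]
    return group["count"] * group["kw_per_rack"] / hall_load_kw(mix)


def rack_share(mix, name):
    """Fraction of the hall's racks that belong to one rack group."""
    total_racks = sum(r["count"] for r in mix.values())
    return mix[name]["count"] / total_racks


if __name__ == "__main__":
    total = hall_load_kw(racks)
    print(f"Total IT load: {total / 1000:.1f} MW")
    print(f"AI racks: {rack_share(racks, 'ai_gpu'):.0%} of racks, "
          f"{power_share(racks, 'ai_gpu'):.0%} of IT load")
```

With these assumed numbers the hall carries 5.2 MW of IT load, and the GPU racks make up 25% of the racks but about 77% of the power, which is why power planning can no longer assume an even spread across the floor.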

Generative AI and deep-learning workloads demand enormous power, fundamentally shifting data center design.

AI workloads: high density and spiky power demands

Unlike legacy IT, AI workloads rely on dense clusters of GPUs and accelerators that consume massive amounts of power. ABB reports that modern AI server racks now often draw 30–50 kW each, and in specialized AI facilities this can reach ~100 kW per rack. McKinsey analysis confirms a rapid rise in rack power density: average global rack density more than doubled from ~8 kW (2020) to ~17 kW (2023) and is projected to reach ~30 kW by 2027. Large AI training clusters can see even higher loads: training models like ChatGPT can push racks to ~80 kW, and the newest GPUs may demand 100–120 kW per rack.
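As a quick sanity check on the McKinsey averages quoted above, the implied compound annual growth rate (CAGR) can be computed directly. This is a minimal sketch; it treats the rounded averages (~8, ~17 and ~30 kW) as exact point values, which they are not.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


# Average global rack density (kW per rack) as cited from McKinsey in the text.
density_2020, density_2023, density_2027 = 8.0, 17.0, 30.0

historical = cagr(density_2020, density_2023, 2023 - 2020)
projected = cagr(density_2023, density_2027, 2027 - 2023)

print(f"2020-2023: x{density_2023 / density_2020:.2f}, {historical:.1%} per year")
print(f"2023-2027: x{density_2027 / density_2023:.2f}, {projected:.1%} per year")
```

The historical figures imply roughly 29% growth per year, while the projection implies about 15% per year. In absolute terms both periods add roughly 3 kW per rack per year, so the projection assumes steady absolute growth rather than continued exponential growth.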

These shifts make IT load a primary design driver: electrical infrastructure, cooling systems and even site selection must be planned around multi-megawatt power blocks rather than dozens of low-power racks. Data centers must now treat IT load as a core capacity metric, effectively measuring a facility in megawatts (MW) of IT load.
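Planning around fixed power blocks inverts the old question: instead of "how many racks fit in the room?", it becomes "how many racks of a given density fit in the available MW?". The sketch below assumes a hypothetical 10 MW IT block and ignores cooling and electrical overheads, which would reduce the usable figure in practice.

```python
import math


def racks_per_block(block_mw, kw_per_rack):
    """Whole racks of a given density that fit within a fixed IT power block."""
    return math.floor(block_mw * 1000 / kw_per_rack)


def blocks_needed(total_it_mw, block_mw):
    """Identical power blocks required to host a target IT load."""
    return math.ceil(total_it_mw / block_mw)


if __name__ == "__main__":
    block_mw = 10  # hypothetical block size, not taken from the article's sources
    for density in (8, 30, 80, 120):
        print(f"{density:>4} kW/rack -> {racks_per_block(block_mw, density):>5} racks per {block_mw} MW block")
```

Under this assumption the same 10 MW supports 1,250 racks at 8 kW but only 125 racks at 80 kW, so the power block, not the floor space, becomes the binding constraint.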

Data centers are becoming “AI factories”

In the AI era, data centers are less like archives and more like manufacturing plants for compute. Here, electricity is the key raw material, and IT load represents production throughput. The scale of a data center is now expressed in MW of IT load instead of simply rack count. Key points:

- Electricity as input: Compute capacity now hinges on power availability.

- IT load as output: Higher rack power means more compute output.

- Scale in MW: Data center capacity is measured in total MW of IT load.

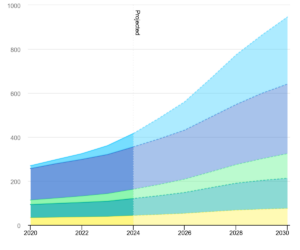

According to the International Energy Agency (IEA), global data center electricity use was about 415 TWh in 2024 (≈1.5% of global electricity consumption) and is projected to more than double to ~945 TWh by 2030, with AI acceleration driving much of this growth. Electricity use by accelerated servers, the main proxy for AI workloads, is expected to grow ~30% per year, far outpacing other sectors. In short, AI is the engine behind a rapidly expanding data center energy footprint.
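The IEA figures can be translated into annual growth rates and doubling times with two small formulas. This sketch only re-derives numbers from the values quoted above (415 TWh, ~945 TWh, ~30% per year); it is not an IEA model.

```python
import math


def cagr(start, end, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


def doubling_time(annual_growth):
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log(1 + annual_growth)


# IEA values quoted in the text: 415 TWh in 2024, ~945 TWh projected for 2030.
total_growth = cagr(415, 945, 2030 - 2024)

print(f"Implied data center demand growth: {total_growth:.1%} per year")
print(f"Doubling time at that rate: {doubling_time(total_growth):.1f} years")
print(f"Doubling time at ~30%/yr (accelerated servers): {doubling_time(0.30):.1f} years")
```

The overall projection works out to roughly 15% per year (a doubling about every five years), while ~30% per year for accelerated servers implies a doubling roughly every 2.6 years.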

Global data center electricity consumption (2024 → 2030 projection). Source: IEA (2024).

Rethinking infrastructure: Power distribution and cooling

Power distribution: ABB warns that racks exceeding ~30 kW each place extreme stress on traditional power infrastructure. Standard busways, PDUs and transformers may not handle the resulting currents without upgrades. AI-focused facilities often require MW-scale power blocks, separation of AI clusters from legacy IT, and stronger redundancy (N+1 or 2N) to maintain reliability under spiky loads.
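Two pieces of arithmetic sit behind this paragraph: how current scales with rack power, and how many units N+1 and 2N redundancy imply. The sketch below uses the standard balanced three-phase relation I = P / (√3 · V_LL · PF). The 415 V line-to-line voltage, 0.95 power factor, 2 MW block and 500 kW UPS module size are hypothetical assumptions for illustration; real designs vary by region and vendor.

```python
import math


def three_phase_current_a(power_kw, line_voltage_v=415.0, power_factor=0.95):
    """Line current (A) of a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF).

    Default voltage and power factor are illustrative assumptions.
    """
    return power_kw * 1000 / (math.sqrt(3) * line_voltage_v * power_factor)


def ups_modules(load_kw, module_kw, scheme):
    """Number of UPS modules for a load under N, N+1 or 2N redundancy."""
    n = math.ceil(load_kw / module_kw)  # modules needed just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1  # one spare module
    if scheme == "2N":
        return 2 * n  # a fully duplicated second system
    raise ValueError(f"unknown redundancy scheme: {scheme}")


if __name__ == "__main__":
    for kw in (8, 30, 100):
        print(f"{kw:>4} kW rack -> {three_phase_current_a(kw):6.1f} A per phase")
    for scheme in ("N", "N+1", "2N"):
        print(f"2 MW block, 500 kW modules, {scheme}: {ups_modules(2000, 500, scheme)} modules")
```

Current grows linearly with power, so at the same voltage a 100 kW rack draws 12.5 times the current of an 8 kW rack (about 146 A versus about 12 A per phase under these assumptions). Cable, breaker and busway ratings have to scale with it.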

Cooling and thermal management: Above roughly 50 kW per rack, conventional air cooling typically can no longer remove heat effectively. Vertiv notes that current racks already exceed 20 kW on average and are expected to surpass 50 kW. As a result, data centers are rapidly adopting liquid-based cooling, such as direct-to-chip (cold-plate) cooling or full immersion, which can efficiently handle 60–150+ kW per rack. In short, liquid cooling is replacing air cooling as the standard for AI data centers.
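The physical reason air runs out of headroom shows up in the sensible-heat relation V = Q / (ρ · c_p · ΔT): the coolant flow needed to carry away a given heat load. The sketch below compares air and water for a 50 kW rack. The fluid properties are approximate room-temperature values, and the temperature rises (12 K for air, 10 K for water) are hypothetical design assumptions.

```python
# Approximate fluid properties near room temperature (assumed values).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)
M3S_TO_CFM = 2118.88     # 1 m^3/s expressed in cubic feet per minute


def volumetric_flow_m3s(heat_w, density, cp, delta_t_k):
    """Coolant flow (m^3/s) that removes heat_w with a temperature rise of delta_t_k.

    Sensible-heat balance: V = Q / (rho * cp * dT).
    """
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    rack_w = 50_000  # 50 kW, the rough air-cooling limit cited in the text
    air = volumetric_flow_m3s(rack_w, AIR_DENSITY, AIR_CP, delta_t_k=12)
    water = volumetric_flow_m3s(rack_w, WATER_DENSITY, WATER_CP, delta_t_k=10)
    print(f"Air:   {air:.2f} m^3/s (~{air * M3S_TO_CFM:,.0f} CFM)")
    print(f"Water: {water * 1000:.2f} L/s (~{water * 60_000:.0f} L/min)")
    print(f"Air needs ~{air / water:,.0f}x the volume flow of water")
```

Under these assumptions a single 50 kW rack needs about 3.5 m³/s of air, but only about 1.2 L/s of water. That gap of nearly three orders of magnitude is why direct-to-chip and immersion cooling scale to much higher rack densities.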

Conclusion – Strategic perspective

AI doesn’t just increase data center demand; it redefines the data center itself around power. In this context:

- IT load is strategic: No longer a background spec, it is the facility’s core capability.

- Competitive edge: A data center’s ability to support extreme IT load is now a key differentiator and determinant of its future viability.

Data center operators and investors must recognize that power capacity and management are the new “prime movers” in the AI era.

📚 Sources

- 📄 ABB (2025). Powering the AI Revolution: Enhanced Protection for High-Density Data Center Infrastructure. Retrieved from: https://new.abb.com/news/detail/131736

- 📄 McKinsey (2023). AI power: Expanding data center capacity to meet growing demand. Retrieved from: https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/ai-power-expanding-data-center-capacity-to-meet-growing-demand

- 📄 IEA (2024). Energy demand from AI. Retrieved from: https://www.iea.org/reports/energy-and-ai/energy-demand-from-ai

- 📄 Ramboll (2024). 100+ kW per rack in data centers: The evolution and revolution of power density. Retrieved from: https://www.ramboll.com/en-us/insights/decarbonise-for-net-zero/100-kw-per-rack-data-centers-evolution-power-density

- 📄 Vertiv / ChannelPost (2024). High-density cooling best practices for data centers. Retrieved from: https://channelpostmea.com/2024/05/09/vertiv-outlines-best-practices-of-high-density-cooling-for-data-centers/